8-23 26 views

master构筑见:使用kubeadm工具快速安装kubernetes集群-master(1.11.2)

Node构筑见:使用kubeadm工具快速安装Kubernetes集群-node(1.11.2)

Docker私有仓库构筑见:CentOS7下搭建Docker私有仓库

Docker私有仓库在k8s集群中的使用:在Kubernetes集群中使用私有仓库(1.11.2)

1. 为什么要使用Prometheus

Prometheus在2016加入 CNCF ( Cloud Native Computing Foundation ), 作为在kubernetes之后的第二个由基金会主持的项目,Prometheus的实现参考了Google内部的监控实现,与源自Google的Kubernetes结合起来非常合适。另外相比influxdb的方案,性能更加突出,而且还内置了报警功能。它针对大规模的集群环境设计了拉取式的数据采集方式,只需要在应用里面实现一个metrics接口,然后把这个接口告诉Prometheus就可以完成数据采集了。

2. 环境

CentOS: 7.5

Docker: 1.13

k8s: 1.11.2

Prometheus: 10.9.54.19/prom/prometheus:v2.2.0

Node Exporter: 10.9.54.19/prom/node-exporter:latest

Grafana: 10.9.54.19/grafana/grafana:4.2.0

Docker hub:10.9.54.19

Master节点:10.9.54.20

Node1节点:10.9.54.21

Node2节点:10.9.54.22

3. 部署

3.1. Node Exporter

Prometheus社区提供的NodeExporter项目可以对于主机的关键度量指标状态监控,通过Kubernetes的DeamonSet我们可以确保在各个主机节点上部署单独的NodeExporter实例,从而实现对主机数据的监控。

3.1.1. 创建Pod

定义YAML文件

[root@k8s-master ~]# vim node-exporter.yml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

--- apiVersion: extensions/v1beta1 kind: DaemonSet metadata: name: node-exporter namespace: kube-system labels: k8s-app: node-exporter spec: template: metadata: labels: k8s-app: node-exporter spec: containers: - image: 10.9.54.19/prom/node-exporter name: node-exporter ports: - containerPort: 9100 protocol: TCP name: http |

执行kubectl create 创建Pod

|

1 2 |

[root@k8s-master ~]# kubectl create -f node-exporter.yml daemonset.extensions/node-exporter created |

3.1.2. 创建Service

定义Service Yaml

[root@k8s-master ~]# vim node-exporter.svc.yml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

--- apiVersion: v1 kind: Service metadata: labels: k8s-app: node-exporter name: node-exporter namespace: kube-system spec: ports: - name: http port: 9100 nodePort: 31672 protocol: TCP type: NodePort selector: k8s-app: node-exporter |

执行kubectl create 创建Service

|

1 2 |

[root@k8s-master ~]# kubectl create -f node-exporter.svc.yml service/node-exporter created |

3.2. Prometheus

3.2.1. RBAC

在Kubernetes1.6版本中新增角色访问控制机制RBAC(Role-Based Access Control)让集群管理员可以针对特定使用者或服务账号的角色,进行更精确的资源访问控制。在RBAC中,权限与角色相关联,用户通过成为适当角色的成员而得到这些角色的权限。这就极大地简化了权限的管理。在一个组织中,角色是为了完成各种工作而创造,用户则依据它的责任和资格来被指派相应的角色,用户可以很容易地从一个角色被指派到另一个角色。

RBAC 的授权策略可以利用 kubectl 或者 Kubernetes API 直接进行配置。RBAC 可以授权给用户,让用户有权进行授权管理,这样就可以无需接触节点,直接进行授权管理。RBAC 在 Kubernetes 中被映射为 API 资源和操作。

定义YAML文件

[root@k8s-master ~]# vim prometheus.rbac.yml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 |

--- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: prometheus rules: - apiGroups: [""] resources: - nodes - nodes/proxy - services - endpoints - pods verbs: ["get", "list", "watch"] - apiGroups: - extensions resources: - ingresses verbs: ["get", "list", "watch"] - nonResourceURLs: ["/metrics"] verbs: ["get"] --- apiVersion: v1 kind: ServiceAccount metadata: name: prometheus namespace: kube-system --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: prometheus roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: prometheus subjects: - kind: ServiceAccount name: prometheus namespace: kube-system |

注:ClusterRoleBinding 用于授权集群范围内所有命名空间的权限

|

1 2 3 4 |

[root@k8s-master ~]# kubectl create -f prometheus.rbac.yml clusterrole.rbac.authorization.k8s.io/prometheus created serviceaccount/prometheus created clusterrolebinding.rbac.authorization.k8s.io/prometheus created |

3.2.2. 利用ConfigMap管理Prometheus的配置

在Kubernetes 1.2版本后引入ConfigMap来处理这种类型的配置数据。

ConfigMap是存储通用的配置变量的,类似于配置文件,使用户可以将分布式系统中用于不同模块的环境变量统一到一个对象中管理,而它与配置文件的区别在于它是存在集群的”环境”中的,并且支持K8S集群中所有通用的操作调用方式。

从数据角度来看,ConfigMap的类型只是键值组,用于存储被Pod或者其他资源对象(如RC)访问的信息。这与secret的设计理念有异曲同工之妙,主要区别在于ConfigMap通常不用于存储敏感信息,只存储简单的文本信息。

ConfigMap可以保存环境变量的属性,也可以保存配置文件。创建pod时,对ConfigMap进行绑定,pod内的应用可以直接引用ConfigMap的配置。相当于ConfigMap为应用/运行环境封装配置。

定义ConfigMap

[root@k8s-master ~]# vim prometheus.configmap.yml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 |

apiVersion: v1 kind: ConfigMap metadata: name: prometheus-config namespace: kube-system data: prometheus.yml: | global: scrape_interval: 15s evaluation_interval: 15s scrape_configs: - job_name: 'kubernetes-apiservers' kubernetes_sd_configs: - role: endpoints scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name] action: keep regex: default;kubernetes;https - job_name: 'kubernetes-nodes' kubernetes_sd_configs: - role: node scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - target_label: __address__ replacement: kubernetes.default.svc:443 - source_labels: [__meta_kubernetes_node_name] regex: (.+) target_label: __metrics_path__ replacement: /api/v1/nodes/${1}/proxy/metrics - job_name: 'kubernetes-cadvisor' kubernetes_sd_configs: - role: node scheme: https tls_config: ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token relabel_configs: - action: labelmap regex: __meta_kubernetes_node_label_(.+) - target_label: __address__ replacement: kubernetes.default.svc:443 - source_labels: [__meta_kubernetes_node_name] regex: (.+) target_label: __metrics_path__ replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor - job_name: 'kubernetes-service-endpoints' kubernetes_sd_configs: - role: endpoints relabel_configs: - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape] action: keep regex: true - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme] action: replace target_label: __scheme__ regex: (https?) - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path] action: replace target_label: __metrics_path__ regex: (.+) - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port] action: replace target_label: __address__ regex: ([^:]+)(?::\d+)?;(\d+) replacement: $1:$2 - action: labelmap regex: __meta_kubernetes_service_label_(.+) - source_labels: [__meta_kubernetes_namespace] action: replace target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_service_name] action: replace target_label: kubernetes_name - job_name: 'kubernetes-services' kubernetes_sd_configs: - role: service metrics_path: /probe params: module: [http_2xx] relabel_configs: - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe] action: keep regex: true - source_labels: [__address__] target_label: __param_target - target_label: __address__ replacement: blackbox-exporter.example.com:9115 - source_labels: [__param_target] target_label: instance - action: labelmap regex: __meta_kubernetes_service_label_(.+) - source_labels: [__meta_kubernetes_namespace] target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_service_name] target_label: kubernetes_name - job_name: 'kubernetes-ingresses' kubernetes_sd_configs: - role: ingress relabel_configs: - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe] action: keep regex: true - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path] regex: (.+);(.+);(.+) replacement: ${1}://${2}${3} target_label: __param_target - target_label: __address__ replacement: blackbox-exporter.example.com:9115 - source_labels: [__param_target] target_label: instance - action: labelmap regex: __meta_kubernetes_ingress_label_(.+) - source_labels: [__meta_kubernetes_namespace] target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_ingress_name] target_label: kubernetes_name - job_name: 'kubernetes-pods' kubernetes_sd_configs: - role: pod relabel_configs: - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape] action: keep regex: true - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path] action: replace target_label: __metrics_path__ regex: (.+) - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port] action: replace regex: ([^:]+)(?::\d+)?;(\d+) replacement: $1:$2 target_label: __address__ - action: labelmap regex: __meta_kubernetes_pod_label_(.+) - source_labels: [__meta_kubernetes_namespace] action: replace target_label: kubernetes_namespace - source_labels: [__meta_kubernetes_pod_name] action: replace target_label: kubernetes_pod_name |

|

1 2 |

[root@k8s-master ~]# kubectl create -f prometheus.configmap.yml configmap/prometheus-config created |

3.2.3. Deployment

定义YAML文件

[root@k8s-master ~]# vim prometheus.deploy.yml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 |

--- apiVersion: apps/v1beta2 kind: Deployment metadata: labels: name: prometheus-deployment name: prometheus namespace: kube-system spec: replicas: 1 selector: matchLabels: app: prometheus template: metadata: labels: app: prometheus spec: containers: - image: 10.9.54.19/prom/prometheus:v2.2.0 name: prometheus command: - "/bin/prometheus" args: - "--config.file=/etc/prometheus/prometheus.yml" - "--storage.tsdb.path=/prometheus" - "--storage.tsdb.retention=24h" ports: - containerPort: 9090 protocol: TCP volumeMounts: - mountPath: "/prometheus" name: data - mountPath: "/etc/prometheus" name: config-volume resources: requests: cpu: 100m memory: 100Mi limits: cpu: 500m memory: 2500Mi serviceAccountName: prometheus volumes: - name: data emptyDir: {} - name: config-volume configMap: name: prometheus-config |

创建Prometheus的Deployment

|

1 2 |

[root@k8s-master ~]# kubectl create -f prometheus.deploy.yml deployment.apps/prometheus created |

3.2.4. Service

定义YAML文件

[root@k8s-master ~]# vim prometheus.svc.yml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

--- kind: Service apiVersion: v1 metadata: labels: app: prometheus name: prometheus namespace: kube-system spec: type: NodePort ports: - port: 9090 targetPort: 9090 nodePort: 30003 selector: app: prometheus |

创建Prometheus的Service

|

1 2 |

[root@k8s-master ~]# kubectl create -f prometheus.svc.yml service/prometheus created |

3.2.5. 测试

Pods

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 |

[root@k8s-master ~]# kubectl get pods --all-namespaces NAMESPACE NAME READY STATUS RESTARTS AGE default mysql-27k5w 1/1 Running 0 1d default mysql-jrnxf 1/1 Running 0 1d default myweb-5gxdt 1/1 Running 0 15h default myweb-9v76s 1/1 Running 0 1d default myweb-btl7l 1/1 Running 0 1d default myweb-kr4v2 1/1 Running 0 1d default myweb-qczmr 1/1 Running 0 15h default myweb-znjxf 1/1 Running 0 1d kube-system coredns-78fcdf6894-f29c5 1/1 Running 1 2d kube-system coredns-78fcdf6894-gcfcr 1/1 Running 1 2d kube-system etcd-k8s-master 1/1 Running 2 2d kube-system kube-apiserver-k8s-master 1/1 Running 2 2d kube-system kube-controller-manager-k8s-master 1/1 Running 2 2d kube-system kube-proxy-7s7xp 1/1 Running 1 2d kube-system kube-proxy-9cjrs 1/1 Running 1 2d kube-system kube-proxy-vccsr 1/1 Running 1 1d kube-system kube-scheduler-k8s-master 1/1 Running 2 2d kube-system node-exporter-fk8sx 1/1 Running 0 11h kube-system node-exporter-qb89f 1/1 Running 0 11h kube-system prometheus-77bbb69b5-7j2h8 1/1 Running 0 11h kube-system weave-net-58wnh 2/2 Running 6 2d kube-system weave-net-dn6bd 2/2 Running 2 1d kube-system weave-net-rs6qh 2/2 Running 5 2d |

Service

|

1 2 3 4 5 6 |

[root@k8s-master ~]# kubectl get svc --all-namespaces NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 2d kube-system node-exporter NodePort 10.97.207.196 <none> 9100:31672/TCP 11h kube-system prometheus NodePort 10.99.42.107 <none> 9090:30003/TCP 11h |

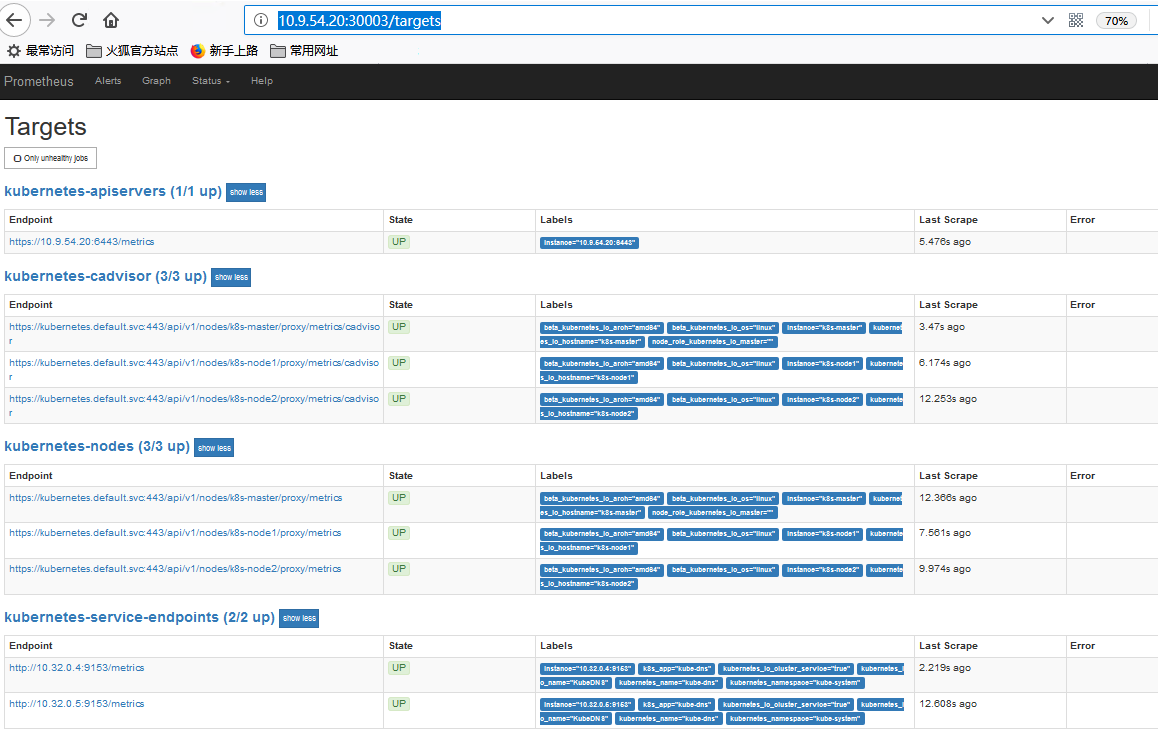

Prometheus对应的Node Port是30003,通过浏览器访问http://10.9.54.20:30003/targets,查看Prometheus连接k8s的api server的状态

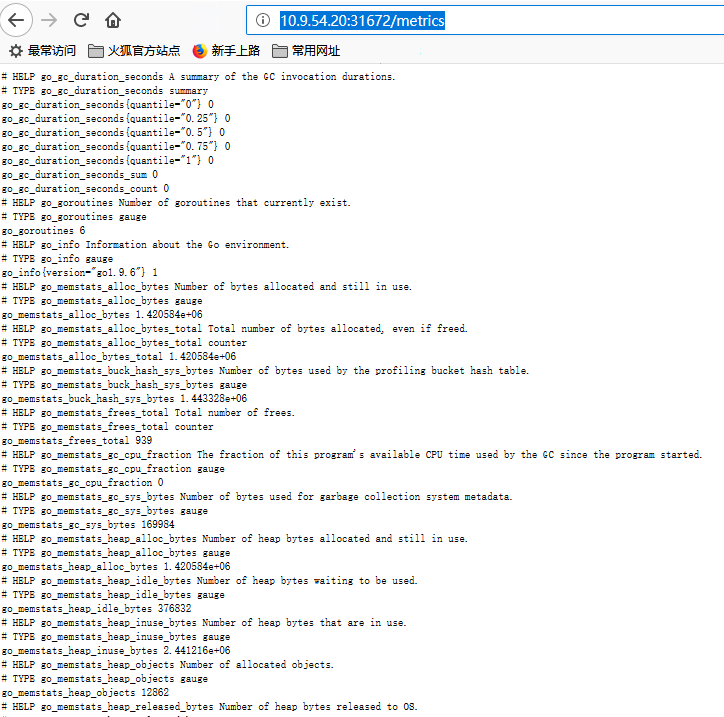

Node Exporter对应的Node Port是31672,通过浏览器访问http://10.9.54.20:31672/metrics,查看metrics接口获取到所有容器相关的性能指标数据

3.3. Grafana

3.3.1. Deployment

定义YAML文件

[root@k8s-master ~]# vim grafana.deploy.yml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 |

apiVersion: extensions/v1beta1 kind: Deployment metadata: name: grafana-core namespace: kube-system labels: app: grafana component: core spec: replicas: 1 template: metadata: labels: app: grafana component: core spec: containers: - image: 10.9.54.19/grafana/grafana:4.2.0 name: grafana-core imagePullPolicy: IfNotPresent resources: limits: cpu: 100m memory: 100Mi requests: cpu: 100m memory: 100Mi env: - name: GF_AUTH_BASIC_ENABLED value: "true" - name: GF_AUTH_ANONYMOUS_ENABLED value: "false" readinessProbe: httpGet: path: /login port: 3000 volumeMounts: - name: grafana-persistent-storage mountPath: /var volumes: - name: grafana-persistent-storage emptyDir: {} |

创建Grafana的Deployment

|

1 2 |

[root@k8s-master ~]# kubectl create -f grafana.deploy.yml deployment.apps/grafana created |

3.3.2. Service

定义YAML文件

[root@k8s-master ~]# vim grafana.svc.yml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

apiVersion: v1 kind: Service metadata: name: grafana namespace: kube-system labels: app: grafana component: core spec: type: NodePort ports: - port: 3000 selector: app: grafana |

创建Grafana的Service

|

1 2 |

[root@k8s-master ~]# kubectl create -f grafana.svc.yml service/grafana created |

3.3.3. Ingress

Kubernetes关于服务的暴露主要是通过NodePort方式,通过绑定minion主机的某个端口,然后进行pod的请求转发和负载均衡,但这种方式下缺陷是

Service可能有很多个,如果每个都绑定一个node主机端口的话,主机需要开放外围一堆的端口进行服务调用,管理混乱无法应用很多公司要求的防火墙规则

理想的方式是通过一个外部的负载均衡器,绑定固定的端口,比如80,然后根据域名或者服务名向后面的Service ip转发,Nginx很好的解决了这个需求,但问题是如果有新的服务加入,如何去修改Nginx的配置,并且加载这些配置? Kubernetes给出的方案就是Ingress,Ingress包含了两大主件Ingress Controller和Ingress.

Ingress解决的是新的服务加入后,域名和服务的对应问题,基本上是一个ingress的对象,通过yaml进行创建和更新进行加载。

Ingress Controller是将Ingress这种变化生成一段Nginx的配置,然后将这个配置通过Kubernetes API写到Nginx的Pod中,然后reload.

定义YAML文件

[root@k8s-master ~]# vim grafana.ing.yml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

apiVersion: extensions/v1beta1 kind: Ingress metadata: name: grafana namespace: kube-system spec: rules: - host: k8s.grafana http: paths: - path: / backend: serviceName: grafana servicePort: 3000 |

创建Grafana的Ingress

|

1 2 |

[root@k8s-master ~]# kubectl create -f grafana.ing.yml ingress.extensions/grafana created |

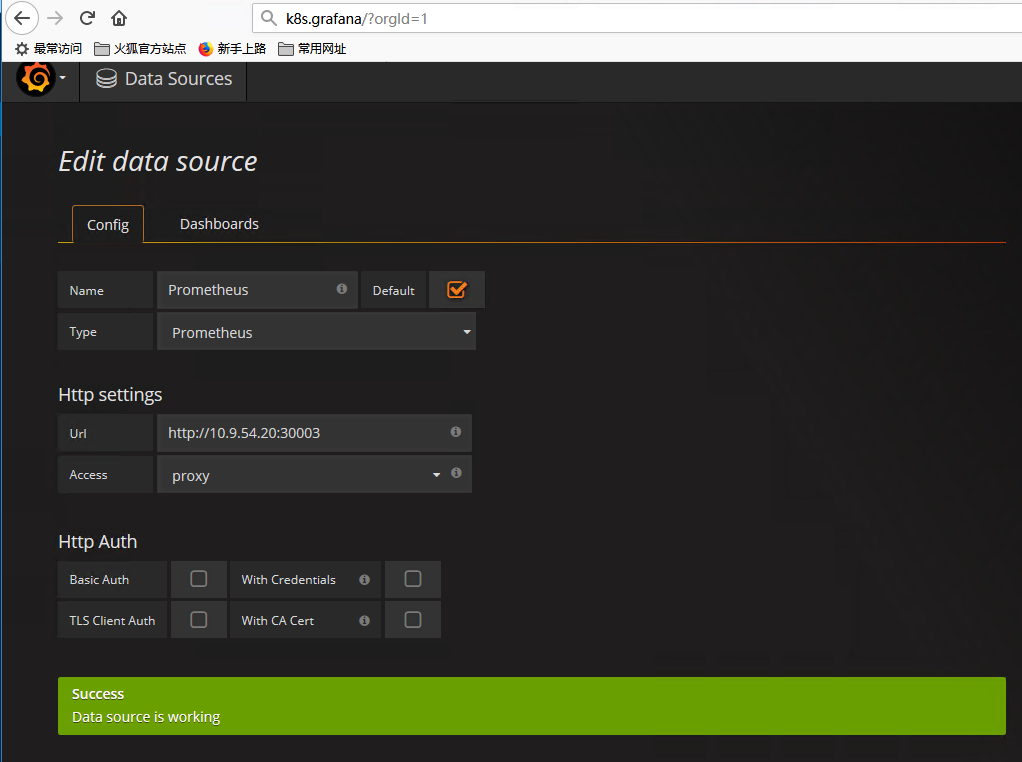

3.3.4. 配置Grafana

配置本地host,添加:10.9.54.20 k8s.grafana

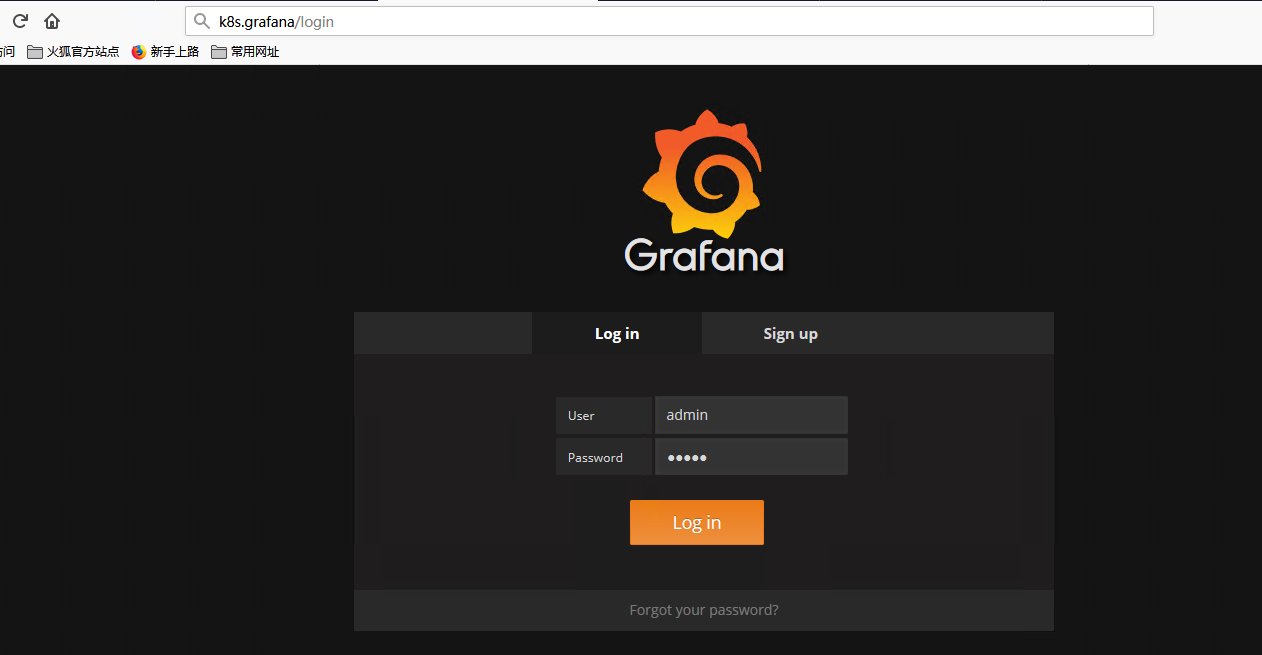

浏览器访问:http://k8s.grafana/login

默认用户名和密码都是admin

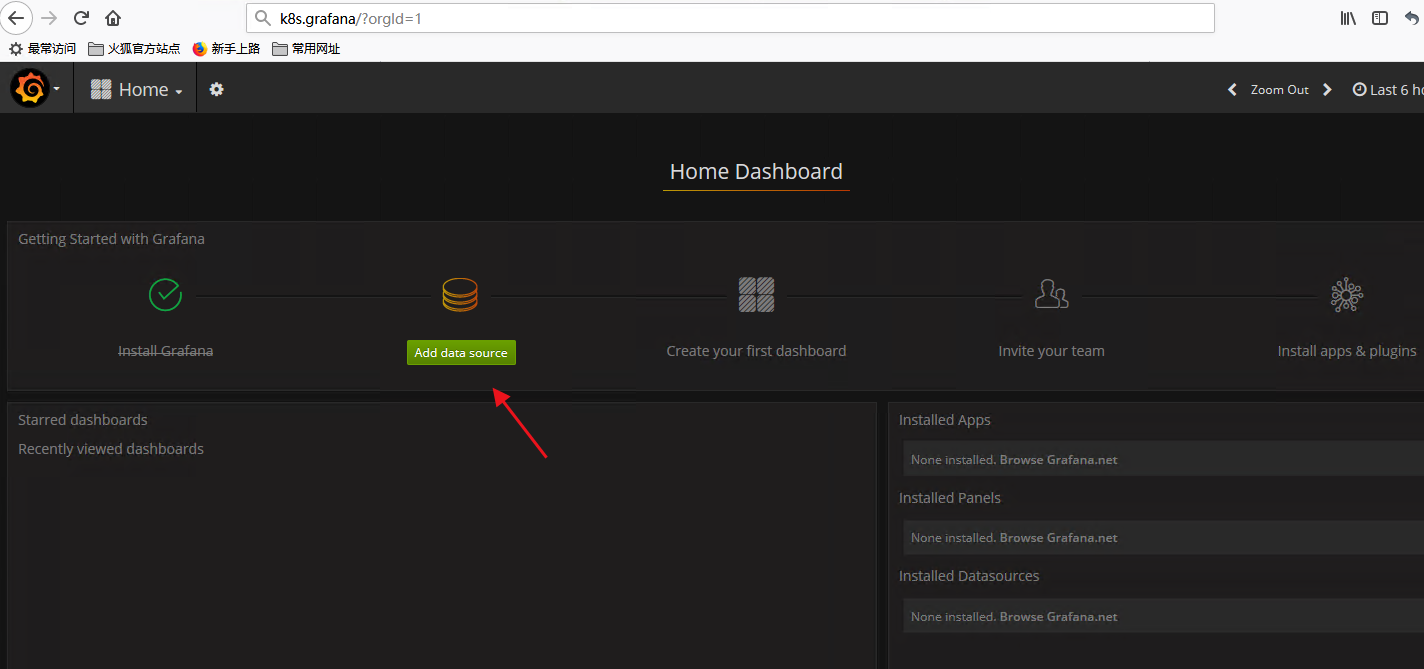

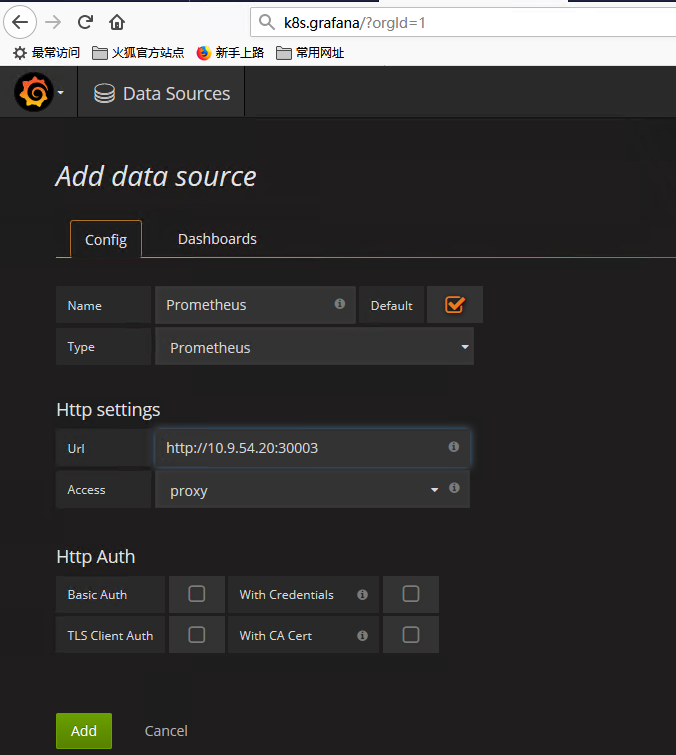

添加数据源

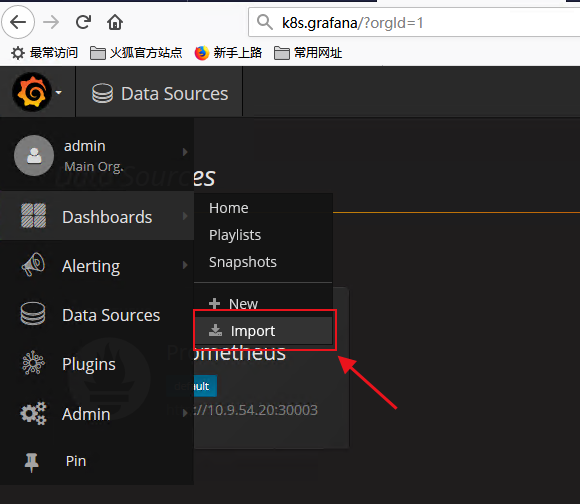

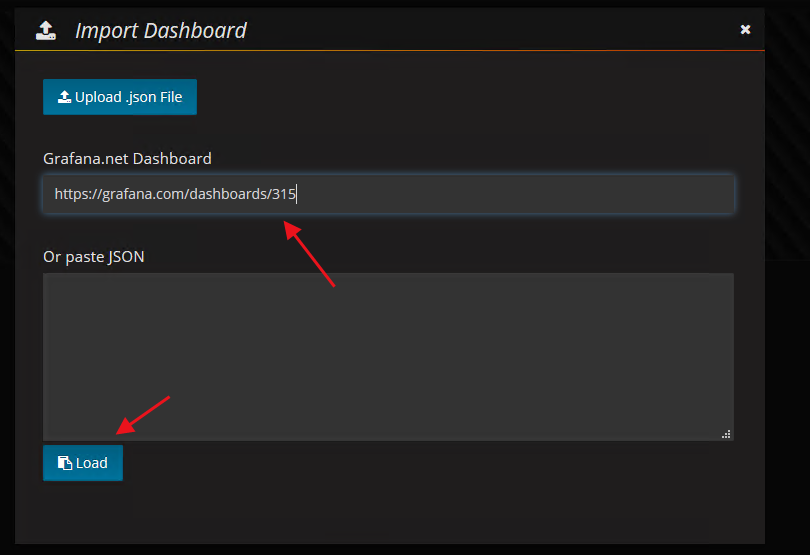

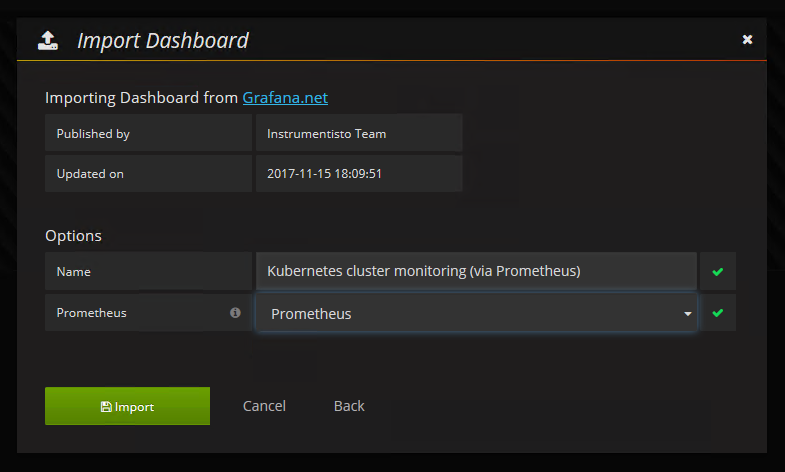

导入Dashboard模板

这里使用的是kubernetes集群模板,模板编号315,在线导入地址https://grafana.com/dashboards/315

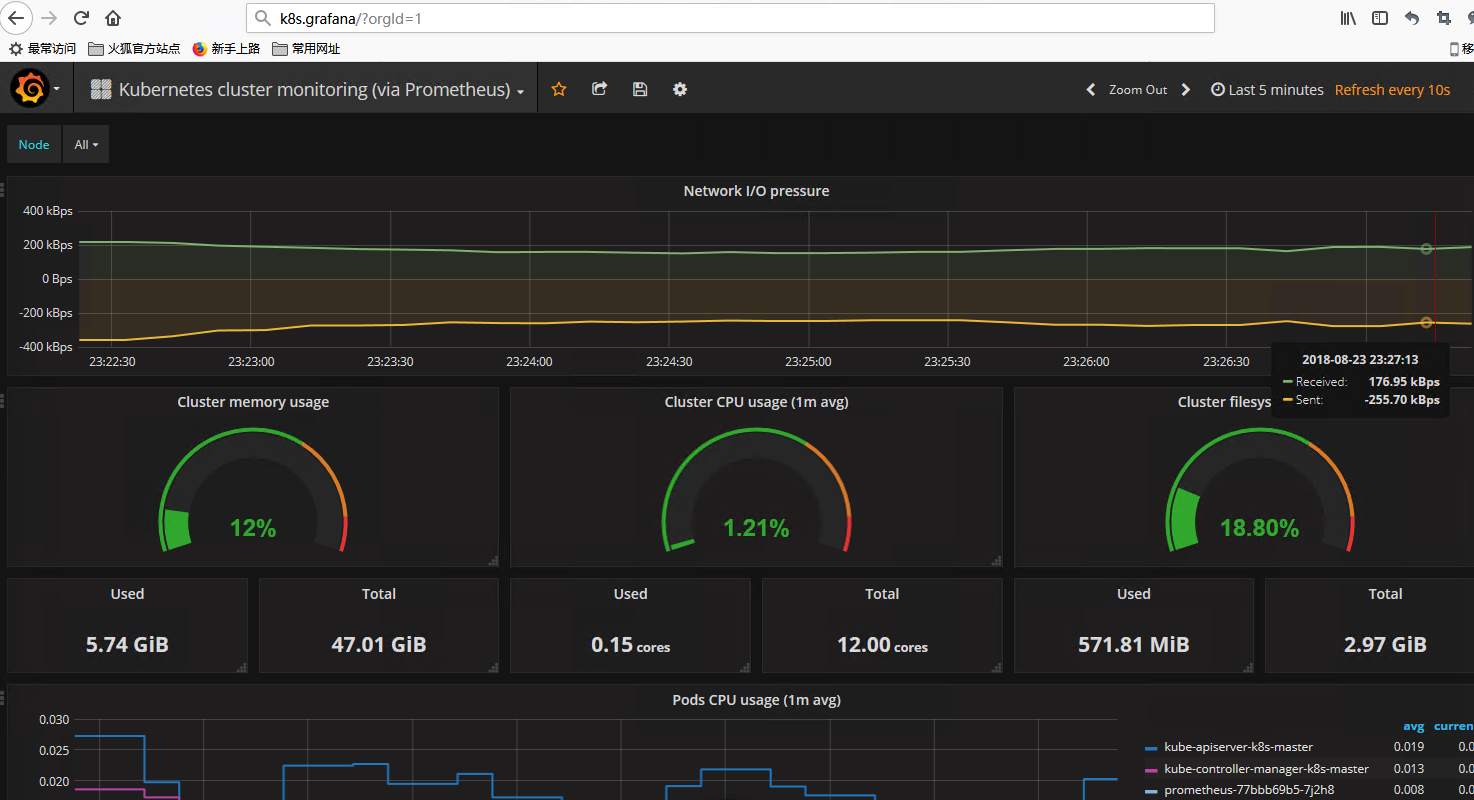

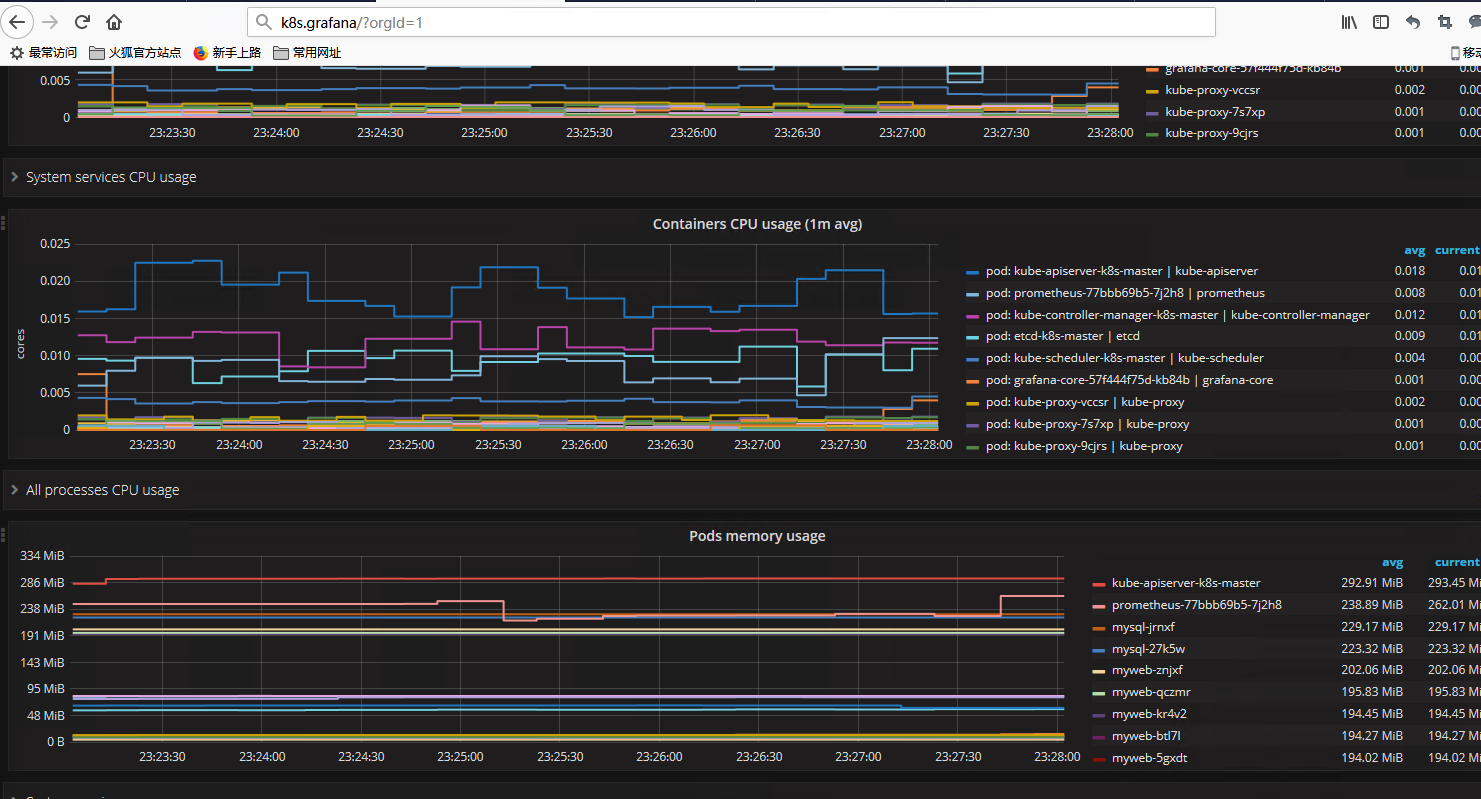

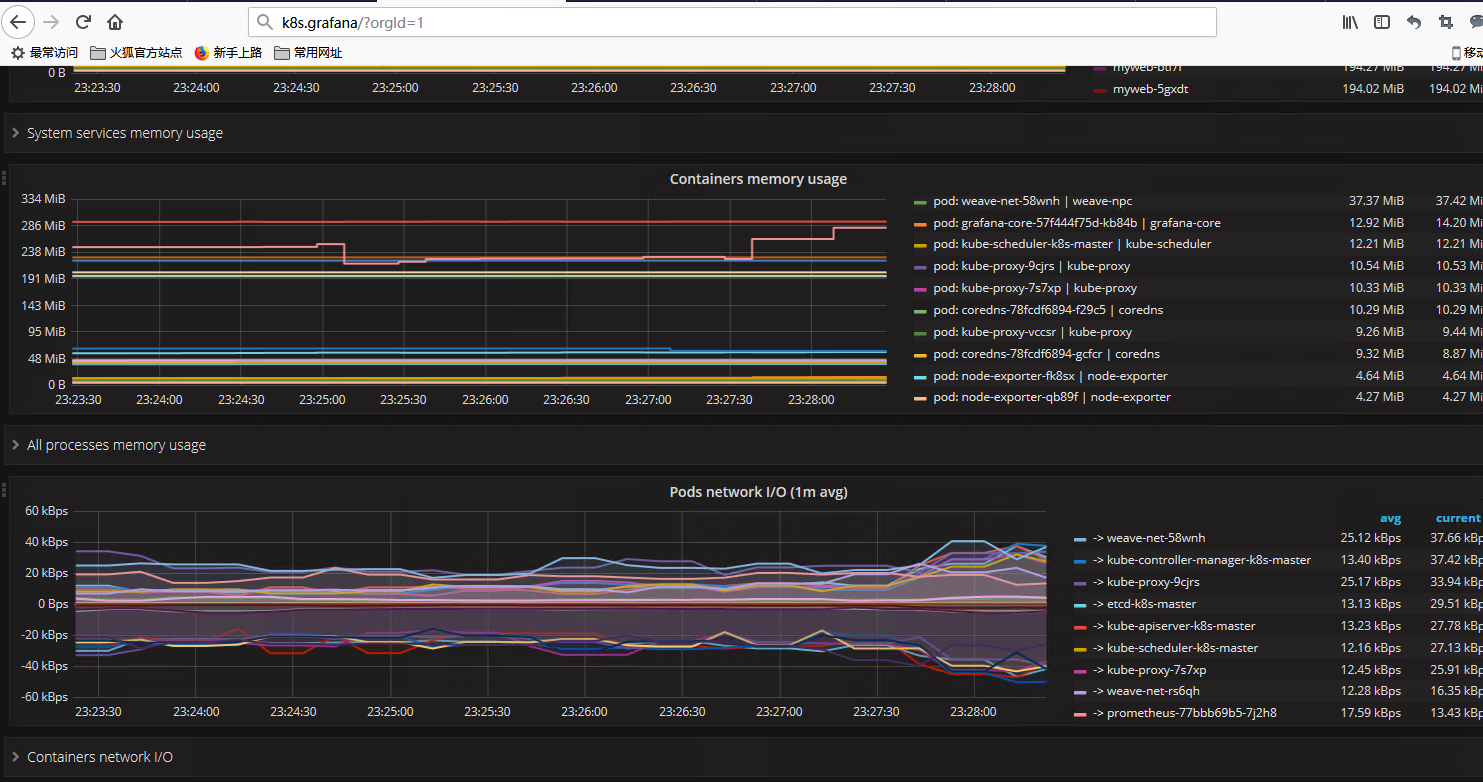

效果展示: